Paradox

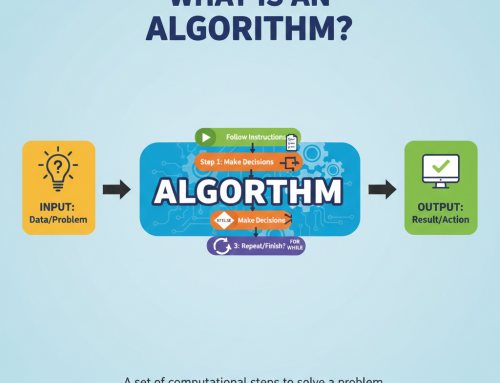

Very few people understand how AI works. Even those who do understand the mechanism don’t know how it arrives at its internal decisions. This can make people very nervous when we give it the power to make decisions on our behalf. This is the black box paradox. We know all the maths, the coding, and the data that goes into an AI model, but how it arrives at a particular prediction is all but impossible to fathom.

Tweaking

The problem starts at the beginning, because a model starts with random weights. It is then trained, and those weights are refined as it learns to match the input data to the output data. On each iteration, it makes a guess, measures the error between the predicted and the correct result, and adjusts the weights to reduce that error. There can be lots of hidden layers, lots of nodes, lots of data, and just lots of everything really: many calculations, endless tweaking, and so on.
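To make that loop a little more concrete, here is a minimal sketch in Python. It assumes the simplest model imaginable, a straight line with one weight and one bias, trained by plain gradient descent. The function name `train`, the toy data, and the settings are all made up for illustration. A real neural network has vastly more weights, but the guess, measure, adjust cycle is the same idea.

```python
import random


def train(inputs, targets, learning_rate=0.05, iterations=500, seed=0):
    """Fit y = w * x + b by gradient descent, starting from random weights."""
    rng = random.Random(seed)
    w = rng.uniform(-1.0, 1.0)  # random starting weight
    b = rng.uniform(-1.0, 1.0)  # random starting bias
    n = len(inputs)
    errors = []

    for _ in range(iterations):
        # Make a guess for every input with the current weights.
        predictions = [w * x + b for x in inputs]
        residuals = [p - t for p, t in zip(predictions, targets)]

        # Measure the error (mean squared difference from the correct result).
        errors.append(sum(r * r for r in residuals) / n)

        # Work out which way to nudge each weight, then nudge it.
        grad_w = 2 * sum(r * x for r, x in zip(residuals, inputs)) / n
        grad_b = 2 * sum(residuals) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

    return w, b, errors


# Toy data (an assumption for this example): the hidden rule is y = 2x + 1.
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
targets = [2 * x + 1 for x in inputs]

w, b, errors = train(inputs, targets)
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"error went from {errors[0]:.4f} to {errors[-1]:.10f}")
```

With only two weights we can read off what the model learned. With millions of them spread over many hidden layers, the same loop still runs, but nobody can read the result in the same way.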

There are a number of hyperparameters we can change to improve things, such as the learning rate or the number of hidden layers. We make sure the data is as good as it can be. Then it is often a matter of switching it on and seeing what happens. Experts in developing AI models have some very good insights into what would make a good model, but even then, it takes time and a degree of guesswork, and it is often more art than science.

Seeing Cats and Dogs

Imagine a black box where the AI is inside beavering away at the data it has just been given. It has no concept of the outside world or even what the data means. All it will see is a lot of numbers which are meaningless in themselves. You show it millions of pictures of cats and dogs to classify, and it still doesn’t know what a dog or cat is actually like.
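As a rough illustration of what "a lot of numbers" means, here is a tiny made-up picture written out the way a model might receive it. The 3x3 grid, the 0 to 255 brightness scale, and the helper name `flatten_and_scale` are assumptions for this sketch. Real photos simply have far more pixels and usually three colour channels.

```python
# A hypothetical 3x3 grayscale "picture": 0 is black, 255 is white.
image = [
    [0, 255, 0],
    [255, 255, 255],
    [0, 255, 0],
]


def flatten_and_scale(pixels):
    """Turn a grid of 0-255 pixel values into one flat list of 0.0-1.0 numbers."""
    return [value / 255 for row in pixels for value in row]


model_input = flatten_and_scale(image)
print(model_input)
```

To us the grid looks like a plus sign. To the model it is just nine numbers in a row, with nothing to say what they depict.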

The AI sits in a dark room trying to get the best result with whatever data it has available. It can do it fast, it can do it relatively efficiently, and once trained, it stops learning and makes guesses or predictions, such as whether the next picture is of a cat or a dog, based on probability. Some models receive data in real time; think of a driverless car or a robot.
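One common way a classifier turns its raw output into a probability is a function called softmax. The sketch below assumes a model has already produced two raw scores for one picture. The scores themselves are invented for the example, and not every model works exactly this way.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that add up to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically safe
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog"]
scores = [2.0, 0.5]  # hypothetical raw outputs for one picture

probs = softmax(scores)
prediction = labels[probs.index(max(probs))]

for label, p in zip(labels, probs):
    print(f"{label}: {p:.2f}")
print("prediction:", prediction)
```

The model never says "this is a cat". It says the numbers lean more towards the label we called "cat" than towards the one we called "dog".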

Impulses

A driverless car or robot can have a camera, lidar, or other sensors to see around it. It can have a microphone to hear sounds or commands, and as far as I am aware, machines can mimic our five senses to some degree as research continues around the world. So you could understandably conclude that if you attach a camera to an AI model, it can see. If it can see, it can learn.

Yet all it is getting is data in the form of numbers (electrical impulses). It then processes this and uses the information to make some prediction or decision, for instance, telling a car to avoid an object in the road. It is all electrical impulses being converted into meaningful data, but it cannot actually see the object in the road.

Our Brain Is Also a Black Box

When people get worked up about super intelligent AI taking over the world, others say it is just a bunch of zeros and ones (which in essence it is). But our brains also sit inside our skulls, in darkness (a black box), and receive information in the form of electrical impulses from our five senses. The brain has neurons that either fire or do not fire, and each firing is a tiny electrical impulse.

We think our brains can see with our eyes, but all the brain does is receive information as electrical impulses, its own version of zeros and ones, which it converts into images in our minds. If we are driving a car and see the same object in the road, we drive around it in just the same way that the driverless car would.

Concluding Thoughts

We could conclude that one day a computer could think like us or be even more intelligent than us. It is possible that it might exhibit feelings and emotions, as some believe it already does. At what point will it become self-aware? If it starts writing its own code, it could improve itself, evolving exponentially. But our brains have billions of neurons, and even the largest computer does not match that. We walk around all day with one sat on our shoulders. We can watch daytime TV and eat our bacon butties. So, I wouldn’t worry too much, at least not yet!

Photo by Cameron Shurley on Unsplash